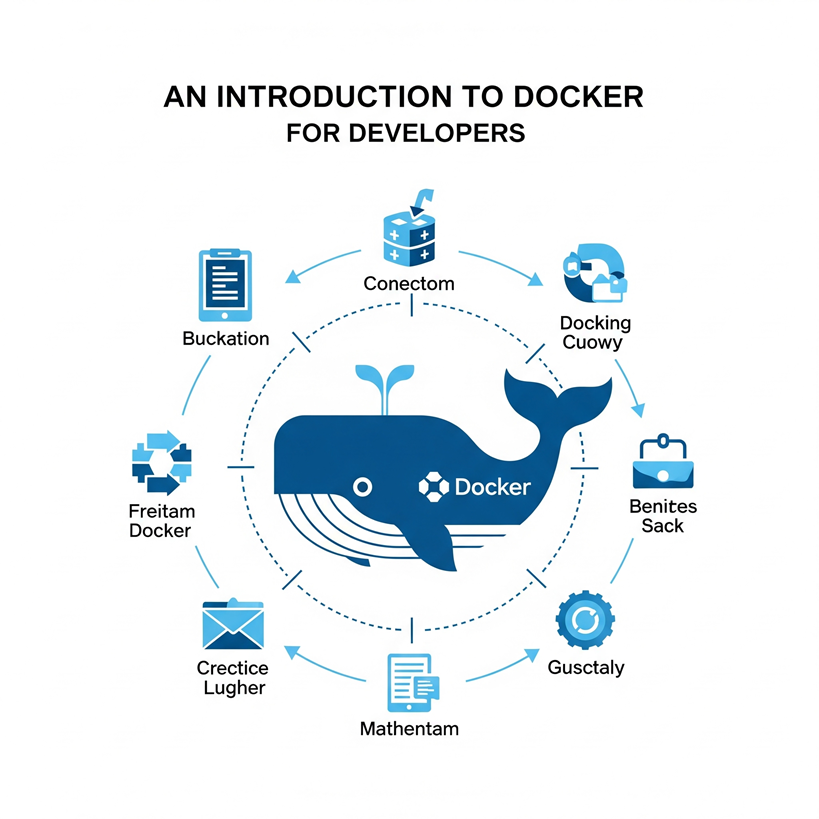

Picture this: you've just finished building an awesome web application on your local machine. Everything works perfectly. You're ready to deploy, but then your teammate tries to run it and gets a dozen dependency errors. Sound familiar? That's where Docker comes in to save the day.

Docker has revolutionized how we develop, ship, and run applications. It's like having a magic box that packages your entire application with everything it needs to run - code, runtime, system tools, libraries, and settings. No more "it works on my machine" excuses!

What Exactly Is Docker?

Think of Docker as a lightweight virtualization platform. While traditional virtual machines virtualize entire operating systems, Docker containers share the host OS kernel but isolate the application processes. This makes containers much faster to start and more resource-efficient than VMs.

At its core, Docker uses four main components:

- Images - Read-only templates that contain your application and its dependencies

- Containers - Running instances of Docker images

- Dockerfile - A text file with instructions for building a Docker image

- Docker Hub - Cloud-based registry for sharing container images

Your First Docker Container

Let's jump right into creating your first Docker container. We'll start with a simple Node.js application to demonstrate the basics.

    // app.js
    const express = require('express');

    const app = express();
    const PORT = process.env.PORT || 3000;

    app.get('/', (req, res) => {
      res.json({
        message: 'Hello from Docker!',
        environment: process.env.NODE_ENV || 'development',
        timestamp: new Date().toISOString()
      });
    });

    app.get('/health', (req, res) => {
      res.status(200).json({ status: 'healthy' });
    });

    // Bind to 0.0.0.0 so the server is reachable from outside the container
    app.listen(PORT, '0.0.0.0', () => {
      console.log(`Server running on port ${PORT}`);
    });

Now here's where the magic happens. Instead of asking your teammates to install Node.js, npm, and figure out which version you're using, we'll create a Dockerfile:

    # Use the official Node.js runtime as the base image
    FROM node:18-alpine

    # Set the working directory inside the container
    WORKDIR /usr/src/app

    # Copy package.json and package-lock.json (npm ci requires the lockfile)
    COPY package*.json ./

    # Install production dependencies only
    RUN npm ci --omit=dev

    # Copy application code
    COPY . .

    # Create a non-root group and user to run the app
    RUN addgroup -g 1001 -S nodejs \
        && adduser -S -u 1001 -G nodejs nextjs

    USER nextjs

    # Document the port the app listens on
    EXPOSE 3000

    # Define the command to run the application
    CMD ["node", "app.js"]

The beauty of Docker isn't just in solving dependency issues. It's about creating reproducible environments that work the same way whether you're developing locally, running tests in CI, or deploying to production.


Building and Running Your Container

With your Dockerfile ready, building and running your container is straightforward. Here are the essential Docker commands every developer should know:

    # Build the Docker image
    docker build -t my-node-app:v1.0 .

    # Run the container
    docker run -d \
      --name my-app-container \
      -p 3000:3000 \
      -e NODE_ENV=production \
      my-node-app:v1.0

    # View running containers
    docker ps

    # Check container logs
    docker logs my-app-container

    # Execute commands inside running container
    docker exec -it my-app-container sh

    # Stop and remove container
    docker stop my-app-container
    docker rm my-app-container

Multi-Container Applications with Docker Compose

Real applications rarely exist in isolation. You probably need a database, maybe Redis for caching, and perhaps a reverse proxy. Docker Compose lets you define and run multi-container applications with ease.

    # docker-compose.yml
    version: '3.8'

    services:
      web:
        build: .
        ports:
          - "3000:3000"
        environment:
          - NODE_ENV=production
          - DATABASE_URL=postgresql://user:password@db:5432/myapp
          - REDIS_URL=redis://redis:6379
        depends_on:
          - db
          - redis
        volumes:
          - ./logs:/usr/src/app/logs

      db:
        image: postgres:15-alpine
        environment:
          - POSTGRES_DB=myapp
          - POSTGRES_USER=user
          - POSTGRES_PASSWORD=password
        volumes:
          - postgres_data:/var/lib/postgresql/data
        ports:
          - "5432:5432"

      redis:
        image: redis:7-alpine
        ports:
          - "6379:6379"
        volumes:
          - redis_data:/data

      nginx:
        image: nginx:alpine
        ports:
          - "80:80"
        volumes:
          - ./nginx.conf:/etc/nginx/nginx.conf
        depends_on:
          - web

    volumes:
      postgres_data:
      redis_data:

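With this compose file, you can spin up your entire application stack with just one command: docker-compose up -d (or docker compose up -d with Compose v2). Your app, database, cache, and reverse proxy all start together on a shared network and can reach each other by service name, which is why the connection URLs above use db and redis as hostnames.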
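One caveat worth sketching: the short form of depends_on used above only controls start order, so the web container may come up before Postgres or Redis is ready to accept connections. A common workaround is to retry the first connection from the application side. The helper below is a minimal sketch of that idea; retryWithBackoff and the connectToDatabase call in the usage comment are hypothetical names, not part of any library.

```javascript
// Retry an async operation with exponential backoff.
// Gives up and rethrows the last error after `retries` additional attempts.
async function retryWithBackoff(operation, { retries = 5, baseDelayMs = 500 } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await operation(attempt);
    } catch (err) {
      lastError = err;
      if (attempt === retries) break;
      // Delay doubles on each failure: 500ms, 1000ms, 2000ms, ...
      const delayMs = baseDelayMs * 2 ** attempt;
      console.error(`Attempt ${attempt + 1} failed: ${err.message}; retrying in ${delayMs}ms`);
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
  throw lastError;
}

// Usage (connectToDatabase is a placeholder for your own client code):
// const db = await retryWithBackoff(() => connectToDatabase(process.env.DATABASE_URL));
```

Retrying in the app keeps the compose file simple; if you would rather have Compose wait for you, a healthcheck on the db service is the other usual route.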

Docker Best Practices for Developers

After working with Docker in production for several years, I've learned some hard lessons. Here are the practices that'll save you headaches down the road:

- Use multi-stage builds to keep your images lean and secure

- Don't run containers as root - create dedicated users for better security

- Leverage .dockerignore to exclude unnecessary files from your build context

- Use specific image tags instead of 'latest' to ensure reproducible builds

- Implement proper health checks for your containers

- Use secrets management instead of environment variables for sensitive data

Here's an example of a production-ready Dockerfile that follows these best practices:

    # Multi-stage build for a Python Flask application
    FROM python:3.11-slim AS builder

    # Install build dependencies
    RUN apt-get update && apt-get install -y --no-install-recommends \
            build-essential \
        && rm -rf /var/lib/apt/lists/*

    # Set working directory
    WORKDIR /app

    # Copy requirements and install Python dependencies
    # (requirements.txt must include gunicorn for the final CMD to work)
    COPY requirements.txt .
    RUN pip install --user --no-cache-dir -r requirements.txt

    # Production stage
    FROM python:3.11-slim

    # Install runtime dependencies (curl is needed by the HEALTHCHECK below)
    RUN apt-get update && apt-get install -y --no-install-recommends \
            curl \
        && rm -rf /var/lib/apt/lists/*

    # Create non-root user
    RUN groupadd -r appuser && useradd -r -g appuser appuser

    # Copy Python packages from builder stage
    COPY --from=builder --chown=appuser:appuser /root/.local /home/appuser/.local

    # Set working directory
    WORKDIR /app

    # Copy application code
    COPY --chown=appuser:appuser . .

    # Switch to non-root user
    USER appuser

    # Add local bin to PATH
    ENV PATH=/home/appuser/.local/bin:$PATH

    # Expose port
    EXPOSE 5000

    # Health check
    HEALTHCHECK --interval=30s --timeout=3s --start-period=5s --retries=3 \
        CMD curl -f http://localhost:5000/health || exit 1

    # Start application
    CMD ["gunicorn", "--bind", "0.0.0.0:5000", "app:app"]
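One practice from the list that a Dockerfile alone does not cover is secrets management. Docker mounts secrets as files under /run/secrets, so the application can read them from disk instead of from its environment. Here is a minimal sketch for the Node.js app from earlier, assuming a hypothetical secret named db_password; readSecret is an illustrative helper, and the environment-variable fallback is only there for local development.

```javascript
const fs = require('node:fs');
const path = require('node:path');

// Read a secret from a mounted file, falling back to an environment variable.
// `dir` defaults to /run/secrets, where Docker mounts secrets in the container.
function readSecret(name, { dir = '/run/secrets', env = process.env } = {}) {
  const filePath = path.join(dir, name);
  try {
    // Secret files often end with a trailing newline, so trim it
    return fs.readFileSync(filePath, 'utf8').trim();
  } catch (err) {
    // Only a missing file triggers the fallback; other errors are real problems
    if (err.code !== 'ENOENT') throw err;
  }
  const fromEnv = env[name.toUpperCase()];
  if (fromEnv === undefined) {
    throw new Error(`Secret "${name}" not found in ${dir} or environment`);
  }
  return fromEnv;
}

// Usage (db_password is a hypothetical secret name):
// const dbPassword = readSecret('db_password');
```

Reading from a file keeps the value out of docker inspect output and out of the process environment, which is the main reason to prefer it for sensitive data.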

Debugging Docker Issues

Docker containers can sometimes feel like black boxes when things go wrong. Here's how I approach debugging container issues:

    # Check what's happening inside a running container
    # (use sh instead of bash on Alpine-based images, which do not ship bash)
    docker exec -it container_name bash

    # View detailed container information
    docker inspect container_name

    # Monitor container resource usage
    docker stats

    # View container filesystem changes
    docker diff container_name

    # Export container filesystem for analysis
    docker export container_name > container_backup.tar

    # Check Docker daemon logs (on systemd-based hosts)
    sudo journalctl -u docker.service

    # Clean up unused resources
    # (-a also removes every image not used by a container, not just dangling ones)
    docker system prune -a

One thing that took me way too long to learn is the importance of proper logging. Always configure your applications to log to stdout/stderr so Docker can capture and manage your logs properly.
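As a rough illustration of that advice, here is a minimal sketch of a logger for the Node.js app from earlier. It writes one JSON object per line to stdout, or to stderr for errors, and never touches a log file. The formatLogLine and log names are made up for this example; in a real project you would more likely reach for an established logging library.

```javascript
// Build one JSON log line; kept as a pure function so it is easy to test.
function formatLogLine(level, message, fields = {}) {
  return JSON.stringify({
    timestamp: new Date().toISOString(),
    level,
    message,
    ...fields,
  });
}

// Errors go to stderr, everything else to stdout.
// Docker captures both streams and exposes them through `docker logs`.
function log(level, message, fields) {
  const stream = level === 'error' ? process.stderr : process.stdout;
  stream.write(formatLogLine(level, message, fields) + '\n');
}

log('info', 'Server running', { port: 3000 });
log('error', 'Database connection failed', { retryInMs: 500 });
```

One line per event keeps the output easy to filter with docker logs and easy for a log collector to parse later.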

Don't try to debug a container by ssh-ing into it. Containers should be treated as immutable infrastructure. If something's wrong, fix the image and redeploy rather than patching a running container.


Performance Optimization Tips

Docker containers can be incredibly efficient, but there are several optimization techniques that make a huge difference in real-world applications:

- Use Alpine Linux base images to minimize size and attack surface

- Implement proper layer caching by ordering Dockerfile instructions strategically

- Use .dockerignore to prevent unnecessary files from bloating your build context

- Configure appropriate resource limits to prevent containers from consuming excessive resources

- Implement container orchestration with proper scaling policies

Here's a practical example of optimizing a typical web application container:

    # Before optimization - inefficient layering
    FROM node:18
    WORKDIR /app
    # Any source change invalidates this layer and forces a full npm install
    COPY . .
    RUN npm install
    EXPOSE 3000
    CMD ["npm", "start"]

    # After optimization - better caching and smaller image
    FROM node:18-alpine AS dependencies
    WORKDIR /app
    COPY package*.json ./
    RUN npm ci --omit=dev

    FROM node:18-alpine AS runtime
    RUN addgroup -g 1001 -S nodejs \
        && adduser -S -u 1001 -G nodejs nextjs
    WORKDIR /app
    COPY --from=dependencies /app/node_modules ./node_modules
    # List node_modules in .dockerignore so this COPY does not overwrite the layer above
    COPY --chown=nextjs:nodejs . .
    USER nextjs
    EXPOSE 3000
    # healthcheck.js and server.js are files your project is expected to provide
    HEALTHCHECK --interval=30s CMD node healthcheck.js
    CMD ["node", "server.js"]

The key insight here is that Docker builds layers incrementally and reuses a cached layer only if that instruction and everything before it are unchanged. By copying package.json and package-lock.json first and installing dependencies before copying your source code, you ensure that dependency installation only happens when your dependencies change, not every time you modify your application code.
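To make the caching rule concrete, here is a small JavaScript model of it. This is a deliberately simplified sketch, not how Docker computes cache keys internally: each step lists the files it reads, and once one step's inputs change, that step and every later step are rebuilt. The layersToRebuild function and the step descriptions are invented for this illustration.

```javascript
// Simplified model of the build cache: a layer is reused only if its own
// inputs are unchanged AND every layer before it was reused.
function layersToRebuild(steps, changedInputs) {
  const changed = new Set(changedInputs);
  let cacheBroken = false;
  const rebuilt = [];
  for (const step of steps) {
    if (!cacheBroken && step.inputs.some((input) => changed.has(input))) {
      cacheBroken = true;
    }
    if (cacheBroken) rebuilt.push(step.instruction);
  }
  return rebuilt;
}

// "Before" layout: source and manifest are copied in one step
const unoptimized = [
  { instruction: 'COPY . .', inputs: ['package.json', 'src'] },
  { instruction: 'RUN npm install', inputs: [] },
];

// "After" layout: manifest first, source last
const optimized = [
  { instruction: 'COPY package*.json ./', inputs: ['package.json'] },
  { instruction: 'RUN npm ci', inputs: [] },
  { instruction: 'COPY . .', inputs: ['package.json', 'src'] },
];

// Editing only application source:
console.log(layersToRebuild(unoptimized, ['src']).join(' | ')); // COPY . . | RUN npm install
console.log(layersToRebuild(optimized, ['src']).join(' | '));   // COPY . .
```

In the optimized ordering a source-only edit leaves the dependency layer cached, which is where most of the build time usually goes.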

Integration with Development Workflow

Docker really shines when it becomes part of your development workflow. Here's how I integrate Docker into daily development:

    # Development docker-compose.override.yml
    version: '3.8'

    services:
      web:
        build:
          context: .
          # Assumes the Dockerfile defines a build stage named "development"
          target: development
        volumes:
          # Mount the source tree for live reload
          - .:/app
          # Anonymous volume so the container keeps its own node_modules
          - /app/node_modules
        environment:
          - NODE_ENV=development
          - DEBUG=app:*
        command: npm run dev

      db:
        ports:
          - "5432:5432"
        environment:
          - POSTGRES_DB=myapp_dev

A small Makefile wraps the common development tasks (recipe lines must be indented with a tab):

    # Makefile for common development tasks
    .PHONY: dev build test clean

    dev:
    	docker-compose -f docker-compose.yml -f docker-compose.override.yml up

    build:
    	docker-compose build --no-cache

    test:
    	docker-compose exec web npm test

    clean:
    	docker-compose down -v
    	docker system prune -f

This setup gives you the best of both worlds - consistent environments across your team while maintaining the fast feedback loops you need during development. File changes are reflected immediately thanks to volume mounting, but the entire stack runs in containers.

Docker has transformed how we think about application deployment and development environments. What used to require complex setup documentation and hours of environment configuration now takes minutes. The consistency Docker provides between development, testing, and production environments eliminates entire classes of bugs.

Whether you're building microservices, monoliths, or anything in between, Docker provides the foundation for modern application development. Start small with a simple Dockerfile, then gradually adopt more advanced patterns like multi-stage builds and orchestration as your needs grow.

The learning curve might seem steep at first, but once Docker clicks, you'll wonder how you ever developed applications without it. Your future self (and your teammates) will thank you for making the investment in learning this essential tool.
