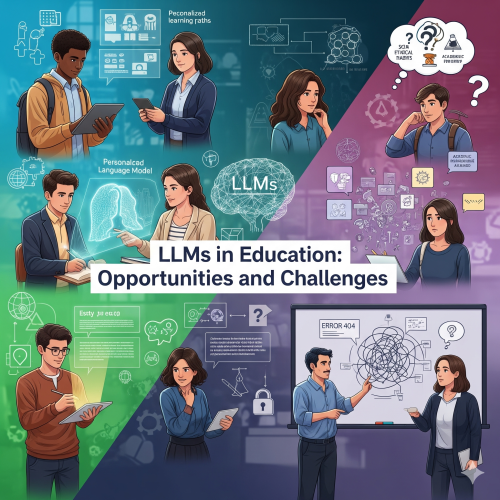

Remember when the biggest tech controversy in classrooms was whether calculators would make students "lazy" at math? Well, hold onto your textbooks, because Large Language Models (LLMs) like ChatGPT, Claude, and Gemini are here, and they're making that calculator debate look like a friendly disagreement about pencil vs. pen.

As someone who's spent countless hours both teaching and learning alongside these AI tools, I can tell you that LLMs in education aren't just another passing fad. They're fundamentally reshaping how we think about learning, teaching, and knowledge itself. But like any powerful tool, they come with both incredible opportunities and significant challenges that we need to navigate carefully.

The Game-Changing Opportunities

Let's start with the exciting stuff. LLMs are creating educational opportunities that would have seemed like science fiction just a few years ago. The most obvious benefit? Personalized tutoring at scale. Imagine having a patient, knowledgeable tutor available 24/7, one that never gets tired of explaining the same concept in different ways until it clicks.

I've watched students who struggled with traditional teaching methods suddenly light up when an LLM explains calculus using analogies from their favorite video games, or breaks down Shakespeare using modern slang they actually understand. The ability to adapt explanations to individual learning styles and interests is genuinely revolutionary.

Practical Applications That Are Working Right Now

- Instant feedback on writing assignments, helping students improve their prose in real time

- Code review and debugging assistance for programming students, acting like a senior developer mentor

- Language learning conversation partners that never judge pronunciation mistakes

- Research assistants that can help synthesize information from multiple sources

- Accessibility tools that can convert text to different formats for diverse learning needs

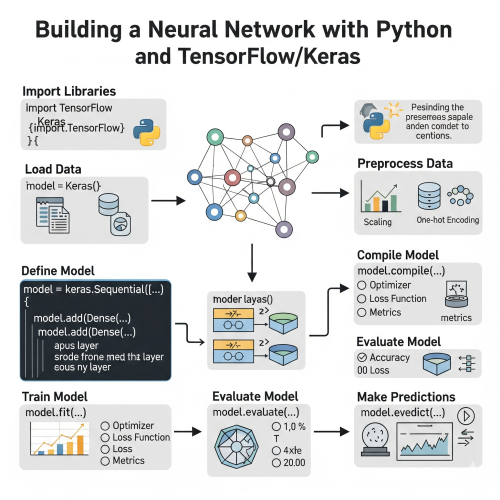

But here's where it gets really interesting from a technical perspective. Let me show you how we might implement a simple educational chatbot using Python and OpenAI's API:

    import openai
    import json
    from typing import List, Dict
    from dataclasses import dataclass

    @dataclass
    class StudentProfile:
        name: str
        grade_level: int
        learning_style: str  # visual, auditory, kinesthetic
        subjects_of_interest: List[str]
        difficulty_level: str  # beginner, intermediate, advanced

    class EducationalAssistant:
        def __init__(self, api_key: str):
            self.client = openai.OpenAI(api_key=api_key)
            self.conversation_history = []

        def personalize_explanation(self, topic: str, student: StudentProfile) -> str:
            """
            Generate personalized explanations based on student profile
            """
            system_prompt = f"""
            You are an educational assistant helping {student.name},
            a {student.grade_level}th grader who learns best through {student.learning_style} methods.
            Their interests include: {', '.join(student.subjects_of_interest)}.
            Adjust your explanation to their {student.difficulty_level} level.
            """

            try:
                response = self.client.chat.completions.create(
                    model="gpt-4",
                    messages=[
                        {"role": "system", "content": system_prompt},
                        {"role": "user", "content": f"Explain {topic} in a way I'll understand"}
                    ],
                    temperature=0.7,
                    max_tokens=500
                )

                explanation = response.choices[0].message.content
                self.conversation_history.append({
                    "topic": topic,
                    "explanation": explanation,
                    "student_profile": student.__dict__
                })
                return explanation
            except Exception as e:
                return f"Sorry, I encountered an error: {str(e)}"

        def assess_understanding(self, topic: str, student_response: str) -> Dict:
            """
            Evaluate student understanding and provide feedback
            """
            assessment_prompt = f"""
            Analyze this student response about {topic}: "{student_response}"
            Provide feedback in JSON format:
            {{
                "understanding_level": "poor/fair/good/excellent",
                "strengths": ["list", "of", "strengths"],
                "areas_for_improvement": ["list", "of", "areas"],
                "next_steps": "suggested next learning steps",
                "encouragement": "positive, motivating message"
            }}
            """

            try:
                response = self.client.chat.completions.create(
                    model="gpt-4",
                    messages=[{"role": "user", "content": assessment_prompt}],
                    temperature=0.3
                )
                return json.loads(response.choices[0].message.content)
            except Exception as e:
                return {"error": f"Assessment failed: {str(e)}"}

    # Usage example
    assistant = EducationalAssistant("your-api-key-here")

    student = StudentProfile(
        name="Alex",
        grade_level=10,
        learning_style="visual",
        subjects_of_interest=["physics", "video games", "art"],
        difficulty_level="intermediate"
    )

    # Generate personalized explanation
    explanation = assistant.personalize_explanation("quantum physics", student)
    print(f"Explanation for {student.name}:")
    print(explanation)

    # Assess student understanding
    student_answer = "Quantum physics is like when particles can be in multiple places at once, kind of like a character in a video game before you observe them?"
    assessment = assistant.assess_understanding("quantum physics", student_answer)
    print(f"\nAssessment: {assessment}")

This code demonstrates how we can create adaptive learning experiences that adjust to individual student needs. The StudentProfile dataclass captures key learning characteristics, while the EducationalAssistant uses these to generate personalized explanations and assess understanding.
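
One fragile spot in `assess_understanding` is the `json.loads` call: chat models often wrap JSON in a sentence of preamble or a Markdown fence, and any of that makes the parse fail. Here is a minimal sketch of a more forgiving parser. `parse_assessment` and `REQUIRED_KEYS` are hypothetical names of my own, not part of the OpenAI SDK, and the approach (take everything between the first `{` and the last `}`) is an assumption that only holds when the reply contains a single JSON object.

```python
import json
import re
from typing import Any, Dict

# Hypothetical helper: the key set mirrors the JSON template in the prompt above.
REQUIRED_KEYS = {
    "understanding_level",
    "strengths",
    "areas_for_improvement",
    "next_steps",
    "encouragement",
}


def parse_assessment(raw_reply: str) -> Dict[str, Any]:
    """Extract the assessment JSON object from a model reply, or return an error dict."""
    # Take everything from the first "{" to the last "}" so that any
    # preamble or code fence wrapped around the object is ignored.
    match = re.search(r"\{.*\}", raw_reply, flags=re.DOTALL)
    if match is None:
        return {"error": "No JSON object found in reply"}
    try:
        data = json.loads(match.group(0))
    except json.JSONDecodeError as exc:
        return {"error": f"Invalid JSON: {exc.msg}"}
    # Reject replies that parse but leave out part of the requested structure.
    missing = sorted(REQUIRED_KEYS - set(data))
    if missing:
        return {"error": f"Missing keys: {', '.join(missing)}"}
    return data


if __name__ == "__main__":
    reply = (
        'Sure! Here is the feedback: {"understanding_level": "good", '
        '"strengths": ["analogy"], "areas_for_improvement": ["precision"], '
        '"next_steps": "Read about superposition", "encouragement": "Nice work!"} '
        "Hope that helps."
    )
    print(parse_assessment(reply))
```

In the class above, you could pass `response.choices[0].message.content` through a helper like this instead of calling `json.loads` directly.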

The Challenges We Can't Ignore

Now, let's talk about the elephant in the room – or should I say, the hallucinating robot in the classroom? LLMs aren't perfect, and their imperfections can be particularly problematic in educational settings where accuracy is paramount.

"The biggest challenge isn't that LLMs might give wrong answers – it's that they give wrong answers with such confidence that both students and teachers might not question them."

Dr. Sarah Chen, Educational Technology Researcher

I've seen students submit essays with completely fabricated citations that sounded so legitimate, they fooled the initial grading process. The model had confidently cited non-existent research papers and made up statistics that supported the student's argument perfectly – perhaps too perfectly.
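
A script can't tell you whether a reference is real, but it can at least pull every citation-like string out of an essay so a human can look each one up. The sketch below assumes author-year citations such as (Smith, 2021) and DOI strings. `citations_to_verify` and both patterns are illustrative choices of mine, and other citation styles would need their own patterns.

```python
import re
from typing import List

# Assumed formats: "(Smith, 2021)", "(Smith et al., 2021)", "(Smith and Lee, 2021)".
AUTHOR_YEAR = re.compile(
    r"\(([A-Z][A-Za-z'\-]+(?: et al\.| (?:and|&) [A-Z][A-Za-z'\-]+)?),? (\d{4})\)"
)
# Assumed format: DOIs that start with "10." followed by a registrant code and a slash.
DOI = re.compile(r'\b10\.\d{4,9}/[^\s"<>]+')


def citations_to_verify(essay: str) -> List[str]:
    """Return citation-like strings: author-year citations first, then DOIs."""
    found: List[str] = []
    for match in AUTHOR_YEAR.finditer(essay):
        found.append(f"{match.group(1)} ({match.group(2)})")
    for match in DOI.finditer(essay):
        # Trim sentence punctuation that the pattern may have swallowed.
        found.append(match.group(0).rstrip(".,;)"))
    # Remove duplicates while keeping the original order.
    return list(dict.fromkeys(found))


if __name__ == "__main__":
    essay = (
        "Sleep improves recall (Walker, 2017). Later work (Nguyen et al., 2020) "
        "agrees; see doi 10.1234/abcd.5678. As (Walker, 2017) notes, the effect is large."
    )
    for item in citations_to_verify(essay):
        print("Check by hand:", item)
```

The output is only a checklist. Each entry still has to be looked up in a library catalogue or publisher site before anyone trusts it.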

Academic Integrity in the Age of AI

The academic integrity conversation is complex and evolving rapidly. Traditional plagiarism detection tools are scrambling to keep up, and educators are grappling with fundamental questions: When does using an LLM constitute cheating? How do we distinguish between appropriate AI assistance and over-reliance?

- Students using AI to generate entire assignments without understanding the content

- Difficulty in distinguishing AI-generated content from student work

- The need for new assessment methods that account for AI assistance

- Evolving policies around acceptable AI use in academic settings

- Training educators to recognize and address AI-assisted work appropriately

Some institutions are banning AI tools outright, while others are embracing them and teaching students how to use them responsibly. The latter approach seems more realistic – these tools aren't going away, so we might as well learn to work with them ethically.

Building Responsible AI Integration

Here's a practical framework I've developed for integrating LLMs responsibly in educational settings. This JavaScript implementation helps track and monitor AI usage:

    class ResponsibleAITracker {
      constructor() {
        this.usageLog = [];
        this.nextId = 1; // each log entry gets an id so validateUsage() can find it
        this.guidelines = {
          maxAIAssistancePercentage: 30,
          requiredHumanVerification: true,
          mandatoryCitation: true,
          reflectionRequired: true
        };
      }

      logAIUsage(studentId, assignment, aiPrompt, aiResponse, humanContribution) {
        const usage = {
          id: this.nextId++,
          timestamp: new Date().toISOString(),
          studentId: studentId,
          assignment: assignment,
          aiPrompt: aiPrompt,
          aiResponseLength: aiResponse.length,
          humanContributionLength: humanContribution.length,
          aiPercentage: this.calculateAIPercentage(aiResponse, humanContribution),
          verified: false,
          cited: false,
          reflected: false
        };

        this.usageLog.push(usage);
        return usage;
      }

      // Share of the combined character count that came from the AI
      calculateAIPercentage(aiResponse, humanContribution) {
        const totalContent = aiResponse.length + humanContribution.length;
        return totalContent > 0 ? (aiResponse.length / totalContent) * 100 : 0;
      }

      validateUsage(usageId) {
        const usage = this.usageLog.find(log => log.id === usageId);
        if (!usage) return { valid: false, reason: "Usage not found" };

        const violations = [];

        if (usage.aiPercentage > this.guidelines.maxAIAssistancePercentage) {
          violations.push(`AI assistance (${usage.aiPercentage.toFixed(1)}%) exceeds limit`);
        }
        if (this.guidelines.requiredHumanVerification && !usage.verified) {
          violations.push("Human verification required");
        }
        if (this.guidelines.mandatoryCitation && !usage.cited) {
          violations.push("AI assistance must be cited");
        }
        if (this.guidelines.reflectionRequired && !usage.reflected) {
          violations.push("Reflection on AI assistance required");
        }

        return {
          valid: violations.length === 0,
          violations: violations,
          recommendations: this.generateRecommendations(usage)
        };
      }

      generateRecommendations(usage) {
        const recommendations = [];

        if (usage.aiPercentage > 20) {
          recommendations.push("Consider reducing AI dependency for deeper learning");
        }
        recommendations.push("Always verify AI-generated information with reliable sources");
        recommendations.push("Reflect on how AI assistance enhanced your understanding");

        return recommendations;
      }

      generateUsageReport(studentId, timeframe = 30) {
        // timeframe is a number of days
        const cutoffDate = new Date();
        cutoffDate.setDate(cutoffDate.getDate() - timeframe);

        const studentLogs = this.usageLog.filter(log =>
          log.studentId === studentId &&
          new Date(log.timestamp) >= cutoffDate
        );

        const totalUsage = studentLogs.length;
        // 0 / 0 is NaN, so "|| 0" covers the case of no logs
        const averageAIPercentage = studentLogs.reduce((sum, log) =>
          sum + log.aiPercentage, 0) / totalUsage || 0;

        return {
          totalAIInteractions: totalUsage,
          averageAIReliance: averageAIPercentage.toFixed(1) + '%',
          complianceRate: this.calculateComplianceRate(studentLogs),
          learningTrends: this.analyzeLearningTrends(studentLogs),
          recommendations: this.generateStudentRecommendations(studentLogs)
        };
      }

      calculateComplianceRate(logs) {
        const compliantLogs = logs.filter(log =>
          this.validateUsage(log.id).valid
        );
        return logs.length > 0 ? (compliantLogs.length / logs.length) * 100 : 100;
      }

      analyzeLearningTrends(logs) {
        return logs.length >= 5 ? {
          aiRelianceTrend: this.calculateTrend(logs.map(log => log.aiPercentage)),
          engagementTrend: this.calculateEngagementTrend(logs),
          subjectDistribution: this.getSubjectDistribution(logs)
        } : "Insufficient data for trend analysis";
      }

      // The four helpers below are called above but were missing from the listing.
      // They are minimal placeholder implementations, not a validated methodology.

      generateStudentRecommendations(logs) {
        // Merge the per-entry recommendations and drop duplicates
        const unique = new Set(logs.flatMap(log => this.generateRecommendations(log)));
        return [...unique];
      }

      calculateTrend(values) {
        // Compare the average of the first half with the average of the second half
        if (values.length < 2) return "stable";
        const middle = Math.floor(values.length / 2);
        const average = arr => arr.reduce((sum, v) => sum + v, 0) / arr.length;
        const diff = average(values.slice(middle)) - average(values.slice(0, middle));
        if (Math.abs(diff) < 1e-9) return "stable";
        return diff > 0 ? "increasing" : "decreasing";
      }

      calculateEngagementTrend(logs) {
        // Assumption: the length of the student's own text is a rough engagement proxy
        return this.calculateTrend(logs.map(log => log.humanContributionLength));
      }

      getSubjectDistribution(logs) {
        // Count log entries per assignment name
        return logs.reduce((counts, log) => {
          counts[log.assignment] = (counts[log.assignment] || 0) + 1;
          return counts;
        }, {});
      }
    }

    // Usage example
    const tracker = new ResponsibleAITracker();

    // Log AI usage
    const usage = tracker.logAIUsage(
      "student123",
      "History Essay",
      "Explain the causes of World War I",
      "AI generated explanation about WWI causes...",
      "Student's analysis and personal insights..."
    );

    // Validate usage
    const validation = tracker.validateUsage(usage.id);
    console.log("Usage validation:", validation);

    // Generate student report
    const report = tracker.generateUsageReport("student123");
    console.log("Student AI Usage Report:", report);
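
It's worth doing the arithmetic behind the 30% rule by hand. This Python sketch mirrors `calculateAIPercentage` (the name `ai_percentage` is my own) and runs it on the two placeholder strings from the usage example. The AI text is 44 characters and the student text is 43, so the AI share comes out at a little over 50% and the entry would be flagged. That shows how crude raw character counts are as a measure of reliance.

```python
def ai_percentage(ai_text: str, human_text: str) -> float:
    """Share of the combined character count that came from the AI, as a percentage."""
    total = len(ai_text) + len(human_text)
    return (len(ai_text) / total) * 100 if total > 0 else 0.0


# Same limit as guidelines.maxAIAssistancePercentage in the tracker.
MAX_AI_PERCENTAGE = 30

ai_text = "AI generated explanation about WWI causes..."
human_text = "Student's analysis and personal insights..."

share = ai_percentage(ai_text, human_text)
print(f"{len(ai_text)} AI chars, {len(human_text)} human chars: {share:.1f}% AI")
print("exceeds limit" if share > MAX_AI_PERCENTAGE else "within limit")
```

A student who pastes in a long AI answer and then writes a short but insightful critique would score worse here than one who lightly pads a mediocre AI summary, so treat the number as a conversation starter, not a verdict.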

The Human Element Can't Be Replaced

Despite all the excitement around AI, we need to remember that education is fundamentally about human connection and growth. LLMs can provide information and even simulate conversation, but they can't provide the empathy, creativity, and wisdom that come from human experience.

I've noticed that students who rely too heavily on AI assistance often struggle with critical thinking and original problem-solving. They become excellent at prompting and refining AI responses but falter when faced with novel situations that require genuine creativity and insight.

Looking Forward: A Balanced Approach

The future of LLMs in education isn't about replacing teachers or eliminating the need for human learning – it's about augmentation and enhancement. The most successful educational implementations I've seen treat AI as a powerful learning tool, not a replacement for fundamental educational processes.

- Teaching students to use AI as a research starting point, not the final answer

- Incorporating AI literacy into curricula across all subjects

- Developing new assessment methods that account for AI assistance

- Training educators to leverage AI tools effectively in their teaching

- Creating policies that promote responsible AI use while preventing over-dependence

We're living through a fascinating transition period in education. LLMs offer unprecedented opportunities to personalize learning, provide instant feedback, and make quality education more accessible. But they also challenge our fundamental assumptions about knowledge, learning, and academic integrity.

The goal isn't to create students who can prompt AI perfectly – it's to create critical thinkers who can leverage AI tools while maintaining their human capacity for creativity, empathy, and original thought.

My Teaching Philosophy

As we navigate this new landscape, the key is finding the sweet spot between embracing innovation and preserving the irreplaceable human elements of education. LLMs are powerful allies in the quest for better learning outcomes, but they work best when guided by human wisdom, creativity, and care.

The students sitting in classrooms today will inherit a world where AI collaboration is the norm, not the exception. Our job as educators is to prepare them not just to use these tools, but to use them thoughtfully, ethically, and in service of genuine learning and human flourishing. That's a challenge worth embracing.
